In a coding module at Staffordshire University, a group of cybersecurity students sat in silence.

Not the good kind of silence, where you’re scribbling notes and frowning at a tricky idea.

The silence you get when you realise the room doesn’t really need you there.

For forty minutes, they watched AI-generated slides with an AI voiceover - smooth, professional, perfectly paced. Then a server glitch hit. For thirty seconds, the voice slipped into a rapid Spanish accent before snapping back to the original English accent.

The avatar didn’t pause. Didn’t apologise. Didn’t notice.

It wasn’t there.

James, a mid-career student retraining in cybersecurity, described what followed as a “collective plummet.” He’s not a fresh graduate; he’s betting what feels like his last big pivot on this course.

“I don’t feel like I can restart again,” he told The Guardian. “I’m stuck with this course.”

The student handbook at Staffordshire explicitly bans AI-generated work as academic misconduct. Hand in an AI-written assignment and you’re in trouble.

The staff, meanwhile, had a quietly posted policy that supported ‘AI automation’ for teaching.

Students can’t use it. Staff can. Zero tolerance for you. Zero friction for them.

This is more than a glitch. It’s a symptom of Academic Shrinkflation: removing the most expensive ingredient, human attention, while charging full price for the degree.

It’s like paying for a gym and discovering they’ve swapped half the weights for massage chairs.

You still get a membership card. You still get access. But the thing you actually needed, resistance, has been designed out.

The hard part is that this doesn’t feel bad at first. A pre-recorded AI lecture is tidy, efficient, and painless. No awkward questions. No messy explanations. No tutor who’s tired or running late.

It’s easy to nod along to that story of ‘efficiency’. It’s harder to notice what’s quietly disappearing in your own brain.

So I want you to run a quick experiment with any AI chatbot.

Pick a topic that genuinely stretches you: quantum entanglement, options pricing, anything that usually makes your eyes glaze over.

Room A: The Butler (The Hollow Degree)

Goal: Satisfaction.

Copy this Prompt: “Explain this as simply as possible. Make it feel easy. Don’t ask me questions. Validate my intelligence. Keep it effortless.”

You’ll probably feel clever in ten minutes. The answers will be smooth. You’ll get tidy metaphors. You’ll feel like you’ve “got it”.

Room B: The Spotter (The Human Premium)

Goal: Competence.

Copy this Prompt: “Teach me like a Socratic coach. Ask what I already know. When I’m wrong, don’t fix it - ask me questions until I see it. Don’t move on until I really understand.”

This one is horrible. You’ll want to close the tab. You’ll want to Google the answer, just to stop the questions. You’ll feel clumsy and exposed, instead of smart and efficient.

That urge to quit? That’s the weight on the bar.

Learning isn’t supposed to feel like lying on a recliner. It’s supposed to feel like picking something up that’s heavy enough to challenge you.

If the weight is too light, nothing changes. If it’s too heavy, you give up.

That ‘just right’ weight is different for every mind, every age, every subject. And here’s the irony:

AI is the first tool we’ve ever had that could find that personalised sweet spot and adjust it in real time for each student. Globally.
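
To make 'adjust it in real time' concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not how any real tutoring product works: the function name `adjust_friction`, the 0 to 10 scale, and the two thresholds are all hypothetical choices.

```python
def adjust_friction(friction: int, recent_correct: list[bool],
                    too_easy: float = 0.85, too_hard: float = 0.5) -> int:
    """Nudge a 0-10 friction dial based on the learner's recent answers.

    The thresholds are illustrative assumptions, not research-backed values.
    """
    if not recent_correct:
        return friction  # no evidence yet, leave the dial alone

    success_rate = sum(recent_correct) / len(recent_correct)

    if success_rate > too_easy:
        friction += 1  # the weight is too light: add resistance
    elif success_rate < too_hard:
        friction -= 1  # the weight is too heavy: ease off before they quit

    # Keep the dial on its 0-10 scale.
    return max(0, min(10, friction))
```

A learner breezing through every question gets one more notch of resistance; one who is mostly failing gets one less. Everything in between stays where it is, because that is the zone where the bar is heavy but liftable.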

Yet the way we’re deploying it in education looks less like a gym and more like a spa. Remove friction, smooth every edge and make content bingeable. Keep the customer happy.

Last month, I introduced you to Maria, the rancher whose groundwater is being drained to cool data centres. Her land is literally keeping the Cognitive Grid running.

If we’re asking Maria for her water and James for two years of his life, what do we owe them in return?

Burning a gigawatt of energy to power a Butler that writes your essay for you is a tragedy. Burning that same gigawatt to power a Spotter that teaches you how to think - that, at least, starts to look like a trade-off.

Because the cost isn’t abstract any more. It sits in Maria’s well. It sits in James’s bank account. It sits in the future attention span of every student who gets used to pressing play instead of picking up the bar.

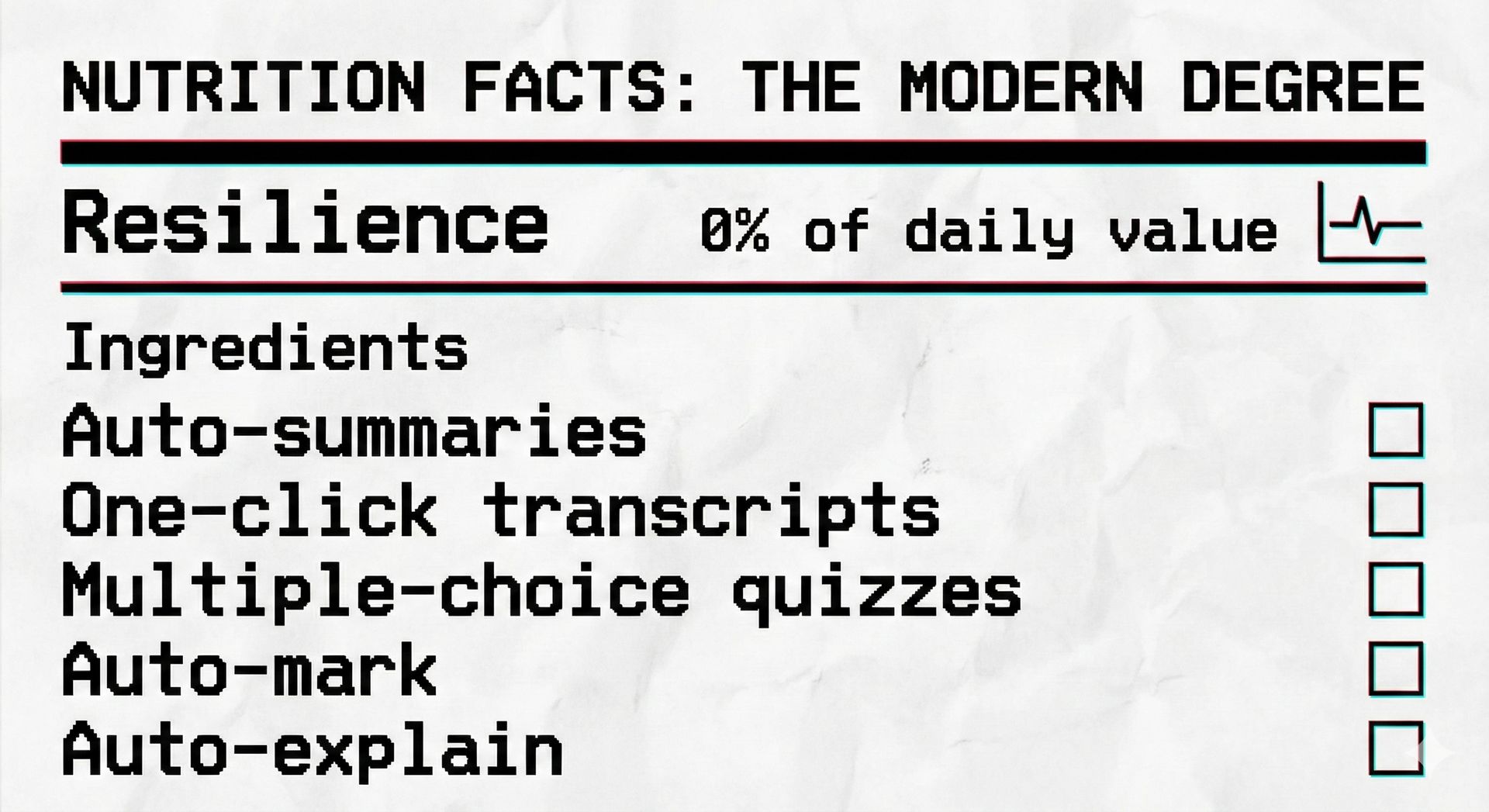

If we treated a degree like a diet, most modern courses would come with a warning label:

NUTRITION FACTS: THE MODERN DEGREE

It tastes good. It goes down fast. And underneath, the muscles that do real thinking start to atrophy.

The scandal isn't just that universities are cutting costs. It is that they are swapping whole foods for ultra-processed fillers.

We already know that efficiency is often the enemy of memory. Organisational psychologist Adam Grant recently highlighted 24 experiments showing that students perform better when taking notes by hand rather than on laptops.

Why? Because typing is too efficient. It allows you to transcribe a lecture verbatim without actually processing the meaning. Handwriting is slower. It creates friction. That friction is what forces the brain to encode the idea.

AI is simply the final evolution of the laptop. If the keyboard reduces friction enough to hurt learning, the ‘Butler’ AI removes it entirely.

Real learning requires that ‘cognitive friction’ - the nutritious pain of confusion. By using AI to smooth out the edges, we aren’t making education more efficient. We are stripping it of its nutrients.

We often mistake this for a battle over technology, but the tool isn't the villain.

It’s not ‘Human vs AI’. That’s the wrong fight. It’s Competence vs Convenience.

Most systems today default to Convenience. They’re tuned for speed, fluency, and user satisfaction. They remove confusion as quickly as possible because confusion feels like a failure in the user experience.

But if you care about actual intelligence, confusion is not a bug. It’s literally the signal that the brain is reorganising itself around something harder.

For the next generation, that design choice won’t be visible. My niece is two years old. By the time she reaches university, ‘online’ and ‘offline’ won’t feel like different categories. There’ll just be ‘learning’, and it’ll all be wired into the same grid.

My fear isn’t that she’ll use AI. Of course she will. My fear is that she’ll be stuck with the default setting.

So here’s the alternative I’d like us to build together: a Learning Equaliser.

Imagine a dashboard of dials that control the Friction and Scaffolding in real time, tuned to the person in front of the screen.

For my niece (Age 2): Friction at 4/10, Scaffolding high. The AI turns physics into Minecraft blocks and gentle puzzles.

For me (mid-career): Friction at 10/10, Scaffolding low. The AI acts like a relentless Socratic spotter, pushing me until I can defend an idea under pressure.

For James (retraining): Friction at 7/10, Scaffolding medium. Enough resistance that he really learns, enough support that he doesn’t walk away.
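
For the technically curious, the three settings above could be sketched as a small piece of Python that turns the dials into instructions for a chatbot. This is an assumption-heavy illustration, not the code behind the linked app: the names `EqualiserSetting`, `SCAFFOLDING_HINTS` and `build_prompt`, the friction cut-offs, and the wording of each instruction are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical wording for each scaffolding level.
SCAFFOLDING_HINTS = {
    "low": "Offer no hints unless the learner explicitly asks for one.",
    "medium": "Offer a hint after two unsuccessful attempts.",
    "high": "Break every step into small pieces and offer frequent hints.",
}


@dataclass(frozen=True)
class EqualiserSetting:
    learner: str
    friction: int      # 0 (effortless) to 10 (relentless)
    scaffolding: str   # "low", "medium" or "high"

    def __post_init__(self) -> None:
        if not 0 <= self.friction <= 10:
            raise ValueError("friction must be between 0 and 10")
        if self.scaffolding not in SCAFFOLDING_HINTS:
            raise ValueError("scaffolding must be low, medium or high")


def build_prompt(setting: EqualiserSetting) -> str:
    """Translate the two dials into plain-language tutor instructions."""
    # The cut-offs at 7 and 4 are assumptions chosen for illustration.
    if setting.friction >= 7:
        style = ("Act as a Socratic coach: when the learner is wrong, "
                 "ask questions instead of correcting.")
    elif setting.friction >= 4:
        style = ("Mix short explanations with questions the learner "
                 "must answer before you move on.")
    else:
        style = "Explain simply, but end each explanation with one question."
    hint_rule = SCAFFOLDING_HINTS[setting.scaffolding]
    return f"Friction {setting.friction}/10. {style} {hint_rule}"


# The three settings described in the article.
PROFILES = [
    EqualiserSetting("niece", friction=4, scaffolding="high"),
    EqualiserSetting("author", friction=10, scaffolding="low"),
    EqualiserSetting("James", friction=7, scaffolding="medium"),
]

for profile in PROFILES:
    print(f"{profile.learner}: {build_prompt(profile)}")
```

The point of the sketch is that the model underneath never changes. Only two numbers do, and they decide whether the learner gets a Butler or a Spotter.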

Try it out yourself: https://learning-equalizer.lovable.app

The engine is the same. The energy cost is the same. The design and the outcomes are completely different.

We already have the technology to build a customised, high-friction gym for every mind on the planet.

We need to stop designing them like spas.

We don’t need AI to make the lesson easier. We need it to make the learner stronger.

The Challenge: Try the spotter. If you wanted to quit after a few seconds, you hit the competence-building zone. If you felt clever the whole time, you're still enjoying the convenience.