In nutrition, a label changes behaviour. Same with cars, appliances, even lightbulbs. But AI? We run billions of queries a day with no idea what they cost - in energy, in emissions, in infrastructure.

What if every AI model came with a label - clear, comparable, impossible to ignore? And if the ingredients - the data used to train the model, the carbon cost, the energy mix - were right in front of you, how long before you started choosing differently?

We talk about AI as if it lives in the cloud - frictionless, weightless. But every prompt has a physical journey. It starts at your keyboard, travels through cables into a data center the size of a football field, lands on racks of humming GPUs and then - at some moment you’ll never see - draws power from a grid somewhere in the world.

That grid could be running on wind and solar. Or it could be a gas plant down the road spinning up to meet demand. Either way, your request ends in the air we all breathe.

It’s the supply chain of a chat. And like most supply chains, it’s invisible until you decide to trace it.

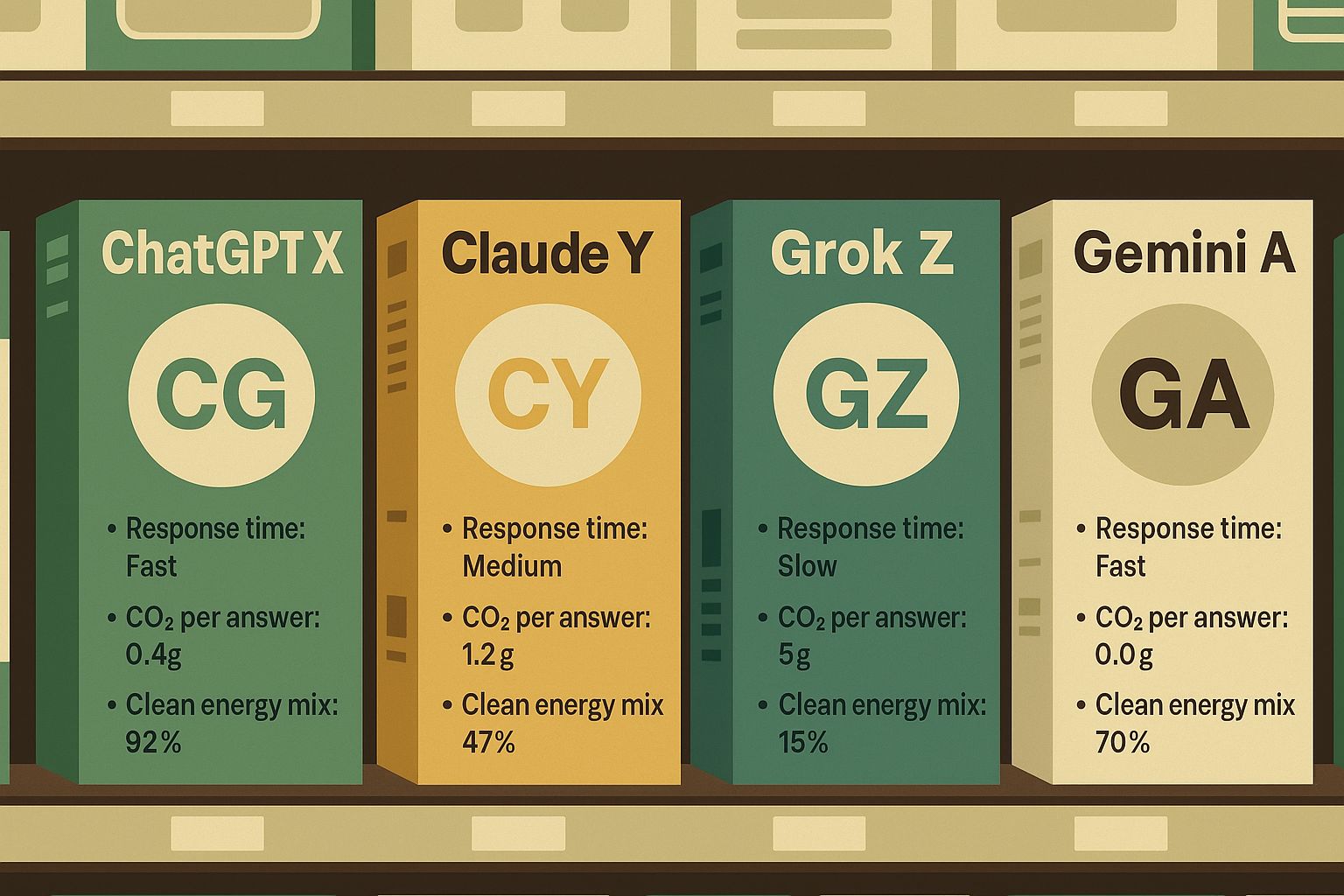

Imagine walking into a supermarket where AI models sit on shelves like cereal boxes. Each one has a front-of-pack label:

Response time: Fast / Medium / Slow

CO₂ per answer: 0.4g / 1.2g / 5g

Clean energy mix: 92% / 47% / 15%

Which one would you pick? And how would developers compete if those numbers were public?

We know this works. Nutrition labels changed food marketing. Fuel economy stickers changed car buying. Even energy labels on fridges shifted the market.

When we can see the externality, it becomes part of the choice.

The footprint of an AI response depends on a few things:

Model size: Bigger models = more compute per request.

Grid timing: Run the same prompt when the sun is up in California and emissions drop.

Routing: Sending a UK user’s prompt to a US data center can add unnecessary energy cost.
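
To make those factors concrete, here is a back-of-the-envelope sketch. It assumes the footprint of one answer is roughly the energy the model uses multiplied by the carbon intensity of the grid that supplies it. Routing shows up only as which grid the request lands on. Every number is a hypothetical placeholder chosen to show the arithmetic, not a measurement of any real model or grid.

```python
def grams_co2_per_answer(energy_wh: float, grid_g_per_kwh: float) -> float:
    """Rough estimate: energy per response (Wh) times grid intensity (gCO2/kWh)."""
    return energy_wh / 1000.0 * grid_g_per_kwh


# All figures below are hypothetical placeholders, not measurements.
ENERGY_WH = {"small model": 0.3, "large model": 3.0}
GRID_G_PER_KWH = {"mostly renewable grid": 50.0, "gas-heavy grid": 500.0}

for model, wh in ENERGY_WH.items():
    for grid, intensity in GRID_G_PER_KWH.items():
        grams = grams_co2_per_answer(wh, intensity)
        print(f"{model} on {grid}: {grams:.3f} g CO2 per answer")
```

With these made-up inputs, the lightest and heaviest combinations differ by a factor of 100. The real spread will be different, but that is the kind of gap a label would surface.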

Recent headlines make this real. Google’s emissions are up 51% since 2019, driven largely by data center growth as it ramps up AI. Microsoft’s new carbon removal deals are making waves - and raising eyebrows. Time magazine reported on a study finding that some prompts can produce 50× more emissions than others.

It’s a reminder that not all “thinking” is equal. Some answers are cheap, others are costly - we just don’t see the bill.

The irony is that the same systems driving this energy demand could also be part of the fix. AI is extraordinarily good at optimisation - from scheduling data center workloads for clean-energy hours to modelling grid demand and reducing waste in industrial processes.

In fact, DeepMind used machine learning to cut the energy used for cooling Google’s data centers by up to 40%, which translated into a roughly 15% reduction in overall power usage effectiveness (PUE) overhead - without compromising performance. That’s the kind of win we could scale if incentives, transparency and regulation were aligned.

But potential doesn’t guarantee impact. Without the right rules and rewards, the path of least resistance will always be toward bigger, faster, more power-hungry models. The question isn’t just whether AI can solve this - it’s whether we’ll design the incentives and governance to make that outcome unavoidable.

If that bill were visible, you might treat AI like home energy - using lighter models for quick asks, running bigger jobs when the grid is cleaner, batching prompts instead of drip-feeding them. Over time, “AI efficiency” could feel as normal as recycling or switching off the lights - not from obligation, but because the impact is visible. And if enough people made those shifts, businesses would design for efficiency and governments would have to set the rules.
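
"Running bigger jobs when the grid is cleaner" can be sketched in a few lines. The example below assumes you have an hourly forecast of grid carbon intensity (gCO2/kWh) and a job that can wait. The function name and the forecast values are hypothetical, and a real scheduler would also weigh deadlines, cost and capacity.

```python
def cleanest_start_hour(forecast: list[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total intensity."""
    if job_hours <= 0 or job_hours > len(forecast):
        raise ValueError("job_hours must be between 1 and len(forecast)")

    # Total intensity of the first window, then slide one hour at a time.
    window_total = sum(forecast[:job_hours])
    best_start, best_total = 0, window_total
    for start in range(1, len(forecast) - job_hours + 1):
        window_total += forecast[start + job_hours - 1] - forecast[start - 1]
        if window_total < best_total:
            best_start, best_total = start, window_total
    return best_start


# Hypothetical forecast for the next eight hours (gCO2/kWh), not real grid data.
forecast = [420.0, 390.0, 310.0, 180.0, 150.0, 170.0, 290.0, 400.0]
start = cleanest_start_hour(forecast, job_hours=3)
print(f"Start the 3-hour job at hour offset {start}")
```

The same idea applies at smaller scale: a batch of prompts that can wait an hour is a deferrable job too.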

The point of a label isn’t guilt - it’s awareness. Numbers can be gamed, but transparency still matters because it changes what’s possible.

If any number on that label makes us uneasy, the problem isn’t just the AI. It’s the way we’ve built our incentives and our appetite for “bigger, faster, smarter” without stopping to ask: at what cost?

AI is not the first technology to hide its environmental bill. But it might be the first where we can make that bill visible in real time - if we choose to.

If your next AI prompt came with a label, what would you want to see on it?
